The Kernel Prompt: Building your own ChatGPT

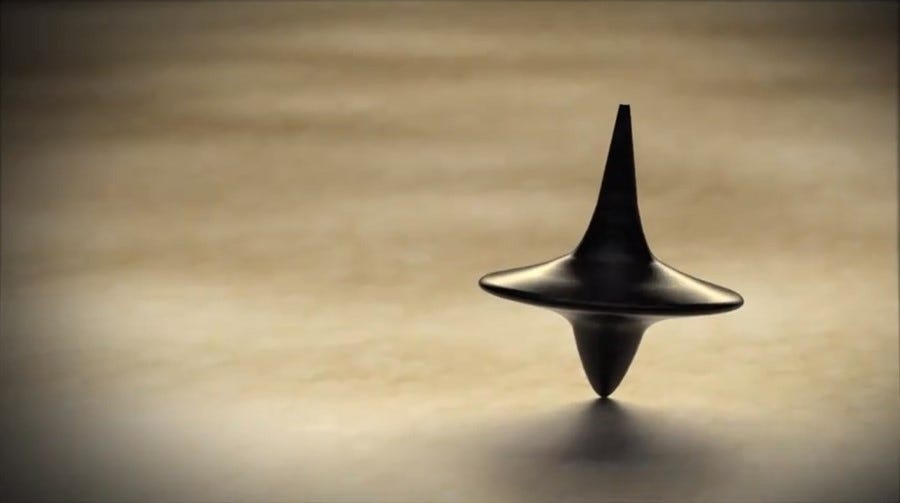

ChatGPT feels miles above GPT-3.5, but what if that’s just on the surface?

The LLM changes the map of what’s easy and what’s hard in unexpected ways. What if all these LLMs trained on internet chat are just intrinsically great at... internet chat?

They just need the right prompt to kick them into that mode.

This week curates attempts to build (or discover) that prompt. I’m going to call these prompts “kernel prompts”: prompts that act as a seed, causing all future interactions to adopt the behavior of a far more complex system. That complexity already resided within the LLM, but needed to be awakened by the kernel.
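Mechanically, a kernel prompt can be as simple as a fixed block of text placed in front of the running transcript on every request. A completion model keeps no memory between calls, so the seed has to be resent each turn. The sketch below assumes a plain-text completion interface. The `User:`/`Assistant:` turn labels, the `KERNEL` text, and the `build_prompt` helper are hypothetical illustrations, not any vendor's actual format.

```python
from typing import List, Tuple

# Hypothetical kernel prompt: a short seed describing the mode we want.
KERNEL = (
    "The following is a conversation with a helpful AI assistant. "
    "The assistant answers clearly and formats code in code blocks."
)


def build_prompt(kernel: str, history: List[Tuple[str, str]], user_msg: str) -> str:
    """Prepend the kernel to the full transcript and open the assistant's turn.

    `history` is a list of (speaker, text) pairs. Because the model is
    stateless, the kernel is resent with every request to keep steering it.
    """
    lines = [kernel, ""]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"User: {user_msg}")
    lines.append("Assistant:")  # the model continues the text from here
    return "\n".join(lines)


if __name__ == "__main__":
    history = [("User", "Hi!"), ("Assistant", "Hello! How can I help?")]
    print(build_prompt(KERNEL, history, "What is a kernel prompt?"))
```

Everything after the kernel is just the conversation so far; the model's only cue about which "mode" to be in is the seed at the top.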

A few things to consider while browsing this week’s links:

Viewed from the Kernel Prompt perspective, prompt jailbreaking stories feel a lot less like negotiating with an alien intelligence and a lot more like ordinary prompt hacking.

If the prompt leak stories are genuine, then the LLM has an impressive understanding of its own conversation. If they’re not genuine, then the LLM has an impressive understanding of its purpose. Either way, the fish is aware of the water.

As with fine-tuning, LLM companies may begin allowing API users to define a region of the input that constitutes the kernel, one that always receives some asserted level of attention from the model.

As long as our LLMs have serious context window constraints, ChatGPT-style companies have an amusing “640k is enough for anyone” problem: their kernel prompt competes for “RAM” with the buffer of user interaction.
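The “RAM” competition can be made concrete with a small sketch: the kernel is always kept, and the oldest conversation turns are dropped until the whole prompt fits the window. Token counts here are approximated by whitespace-separated words, an assumption made purely for illustration (a real system would use the model's own tokenizer). The `fit_to_window` helper and the budget numbers are hypothetical.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(kernel: str, turns: List[str], window: int) -> List[str]:
    """Return the kernel plus as many of the most recent turns as fit in `window`.

    The kernel is pinned: it is always included, and its size is subtracted
    from the budget before any conversation turns are considered.
    """
    budget = window - count_tokens(kernel)
    if budget < 0:
        raise ValueError("kernel prompt alone exceeds the context window")
    kept: List[str] = []
    # Walk backwards from the newest turn, keeping turns while they fit.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    kept.reverse()  # restore chronological order
    return [kernel] + kept


if __name__ == "__main__":
    kernel = "You are a helpful assistant."  # 5 "tokens"
    turns = ["one two three", "four five", "six seven eight nine"]
    # A window of 12 leaves 7 for the conversation: the last two turns (2 + 4) fit.
    print(fit_to_window(kernel, turns, 12))
```

Stopping at the first turn that does not fit keeps the retained history contiguous. Every token the kernel spends is a token the conversation can no longer use.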

Here are this week’s links:

ChatGPT Guess by Riley Goodside

A prompt which induces ChatGPT-like behavior in five short paragraphs. Notably, code blocks and the knowledge cutoff seem to work. Measuring where responses to a prompt like this diverge from the real ChatGPT should reveal something about OpenAI’s implementation.

(Maybe) YouChat Prompt by @RexDouglass

A claimed retrieval of the YouChat kernel prompt by simply… asking it. I default to believing YouChat is just hallucinating a plausible response... but it’s a very plausible response. Plus, how would its training set have prepared it to answer this specific question?

(Refuted) Jasper Prompt Guess by @0interestrates

Again, what looks to be a plausible kernel prompt leak. What’s interesting is that Jasper CEO Dave Rogenmoser wrote back to say it wasn’t. If Dave is right, I’m almost MORE impressed with LLMs: hallucinating a plausible kernel prompt requires deep understanding of what the present conversational context is.

(Maybe) Notion Prompt by Shawn Wang

Shawn’s post gives a play-by-play of how one can go about jailbreaking an LLM endpoint, and what Notion’s set of kernel prompts might be. The consistent compactness of these prompts makes me hopeful for a golden age of end-user programming.

Virtual Machine inside ChatGPT by Jonas Degrave

A working example of a kernel prompt that produces a plausible Unix shell prompt. All future interactions get replies as if one were using a terminal. Jonas uses the terminal to visit ChatGPT’s website via curl and… create another terminal. Turtles all the way down.

Lisp REPL inside ChatGPT by Max Taylor

I’m being repetitive, but what’s nuts to me is just how little text is required to act as a “kernel” into another mode of operation. Here, Max creates a Lisp interpreter with a single paragraph of text. One wonders how much better (or worse!) this interpreter would get if it were trained exclusively on Lisp code.

That’s it for this week!

Have a request for a theme or tips on a great project to check out? Let me know!

Shipped with AI is brought to you by Steamship, a package manager for hosted AI apps.